-

The Extinction Scenario

-

This is more of a brain dump of random strands of thought than an article-that-makes-a-point.

-

Premise: There's a lot of talk of "AI doom": a scenario in which an AI is created that wipes out humans, either through malevolence or by accident while pursuing a goal it was given.

-

Background Ideas

-

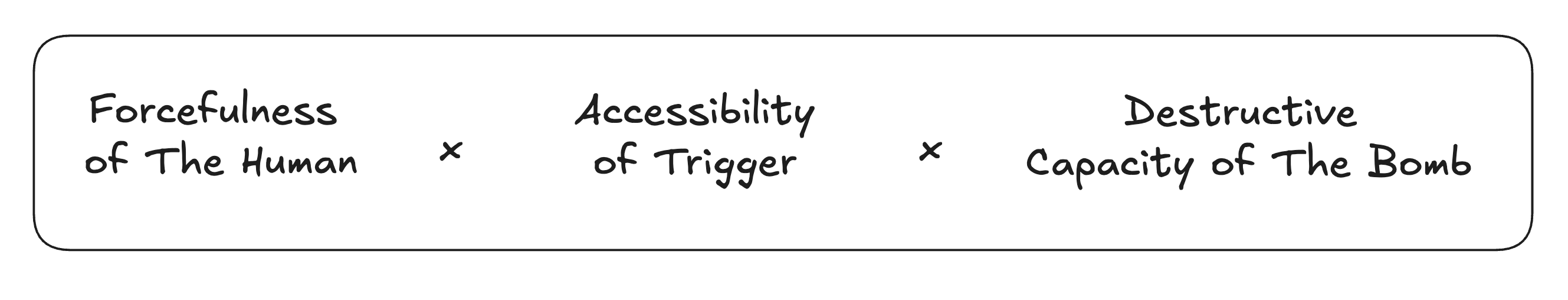

The Destruction Equation

-

Humans are pretty well distributed around the planet. In any scenario where all humans get wiped out, three things would be needed.

-

-

The word "Bomb" in this diagram can be taken to mean "anything that can wipe out all humans". Currently in this category we have state-owned nuclear arsenals and advanced bioweapons.

- Accessibility of the trigger: How easy is it for someone to access, for example, a bioweapon or a state's nuclear codes?
- Forcefulness of the human: How much will does the person have to follow through? If the trigger is accessible enough, all it would take is someone having a bad day. If the trigger is extremely protected, it would take enormous effort, organization and co-ordination to access it.

-

-

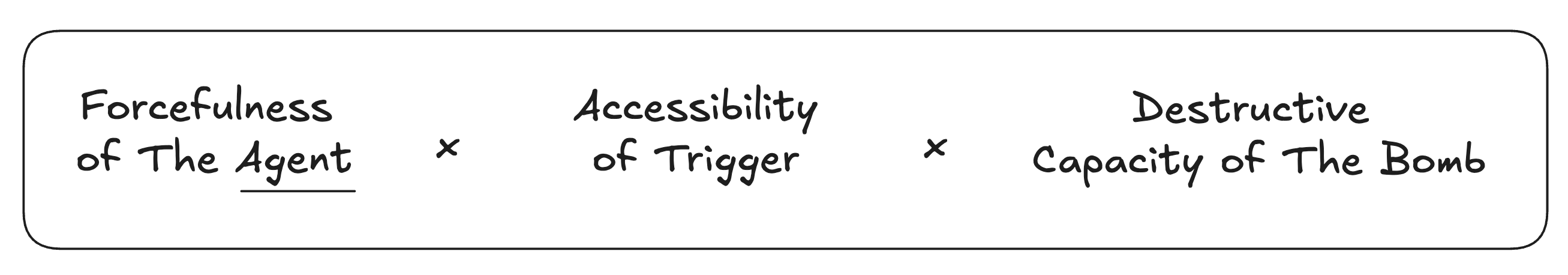

If the question is whether an AI can trigger this, we can replace "Human" with "Agent", which in this case means an entity with agency and intelligence.
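
The trade-off between accessibility and forcefulness described above can be sketched as a toy threshold model. This is only an illustration under stated assumptions: the `Agent` and `Trigger` classes, the 0-to-1 scales, and the rule that required effort equals `1 - accessibility` are all hypothetical and are not taken from the diagrams.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """Hypothetical stand-in for the "Human"/"Agent" box in the diagram."""
    forcefulness: float  # 0.0 (no will to follow through) .. 1.0 (unlimited will)


@dataclass
class Trigger:
    """Hypothetical stand-in for the trigger guarding the "Bomb"."""
    accessibility: float  # 0.0 (extremely protected) .. 1.0 (open to anyone)


def effort_required(trigger: Trigger) -> float:
    # Assumption: the harder a trigger is to access, the more effort it takes.
    return 1.0 - trigger.accessibility


def can_fire(agent: Agent, trigger: Trigger) -> bool:
    # Assumption: the trigger fires only if the agent's forcefulness
    # meets or exceeds the effort the trigger demands.
    return agent.forcefulness >= effort_required(trigger)


# "Someone having a bad day" versus a determined, well-organized agent.
bad_day = Agent(forcefulness=0.1)
determined = Agent(forcefulness=0.995)

easy_trigger = Trigger(accessibility=0.95)
guarded_trigger = Trigger(accessibility=0.01)

print(can_fire(bad_day, easy_trigger))
print(can_fire(bad_day, guarded_trigger))
print(can_fire(determined, guarded_trigger))
```

In this sketch, raising the protection on the trigger raises the bar on forcefulness, which is the intuition the text describes; the specific numbers carry no meaning beyond that.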

-

-

The Forcefulness Equation

-

Zooming in further on the left side, in order for an entity to successfully trigger a catastrophe, it would need to:

- Reach a conclusion that it should trigger it (likely with some underpinning theory of why).
- Figure out how it could trigger it.
- Remain in an observe-narrate-act loop for long enough to undertake all of the actions required (that is, avoid being "shut off").
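
The three requirements above can be read as a conjunction: if any one of them fails, the agent does not follow through. A minimal sketch of that reading, assuming a hypothetical `AgentState` record whose field names are purely illustrative:

```python
from dataclasses import dataclass


@dataclass
class AgentState:
    """Hypothetical snapshot of an agent; all field names are illustrative."""
    decided_to_trigger: bool   # reached the conclusion that it should act
    knows_how: bool            # figured out how it could trigger the event
    steps_remaining: int       # actions still needed to carry out the plan
    steps_before_shutoff: int  # loop iterations it survives before being shut off


def can_follow_through(state: AgentState) -> bool:
    # Assumption: the agent stays in its loop long enough only if it
    # survives at least as many iterations as the plan still requires.
    stays_running = state.steps_before_shutoff >= state.steps_remaining
    # All three conditions from the list must hold at once.
    return state.decided_to_trigger and state.knows_how and stays_running


# An agent that has decided and knows how, but is shut off too early.
interrupted = AgentState(True, True, steps_remaining=10, steps_before_shutoff=5)
print(can_follow_through(interrupted))
```

Modelling it this way makes the point that interrupting any single condition, such as shutting the loop off early, is enough to break the chain.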

-

-

A model is not an ego-centric brain

-

How Easy Is It To Destroy all of Humanity?

-

This is an exploration of the likelihood of AI driving humans extinct and an add-on to this article: The Extinction Scenario